Research Seminar

Towards Combining Statistical Relational Learning and Graph Neural Networks

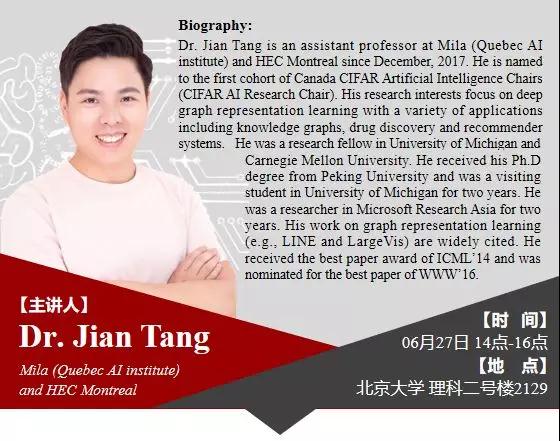

Speaker: Dr. Jian Tang

Affiliation: Mila (Quebec AI Institute) and HEC Montreal

Developing statistical machine learning methods for prediction on graphs is a fundamental problem in many applications, such as semi-supervised node classification and link prediction on knowledge graphs. These problems have been studied extensively by traditional statistical relational learning methods and, more recently, by graph neural networks, which are attracting increasing attention. In this talk, I will introduce our recent efforts to combine the advantages of both worlds for prediction and reasoning on graphs: our work combining conditional random fields and graph neural networks for semi-supervised node classification (Graph Markov Neural Networks, ICML'19), and more recent work combining Markov Logic Networks and knowledge graph embedding for reasoning on knowledge graphs (Probabilistic Logic Neural Networks, in submission).

In this talk, I will present our ICML'19 paper, GMNN: Graph Markov Neural Networks. We study semi-supervised object classification in relational data, a fundamental problem in relational data modeling. The problem has been studied extensively in the literature of both statistical relational learning (e.g., Relational Markov Networks) and graph neural networks (e.g., Graph Convolutional Networks). Statistical relational learning methods can effectively model the dependency among object labels through conditional random fields for collective classification, whereas graph neural networks learn effective object representations for classification through end-to-end training. In this paper, we propose the Graph Markov Neural Network (GMNN), which combines the advantages of both worlds. GMNN models the joint distribution of object labels with a conditional random field, which can be trained effectively with the variational EM algorithm. In the E-step, one graph neural network learns effective object representations for approximating the posterior distributions of object labels. In the M-step, another graph neural network is used to model the local label dependency. Experiments on object classification, link classification, and unsupervised node representation learning show that GMNN achieves state-of-the-art results.
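The E-step/M-step alternation described above can be illustrated with a deliberately tiny sketch. It is not the GMNN implementation, and several pieces are assumptions made only for illustration: both graph neural networks are replaced by hypothetical linear stand-ins (the posterior model q is a softmax regression over features averaged across a node and its neighbors; the label-dependency model p is a softmax regression over the mean label distribution of a node's neighbors), soft predictions from q are used where GMNN would draw label samples, and the toy graph, the features, and the names `gmnn_sketch` and `SoftmaxRegression` are invented. The schedule (pre-train q on labeled nodes, then alternate an M-step fitting p on q's beliefs and an E-step refitting q on gold labels plus p's predictions for unlabeled nodes) is meant only to mirror the structure the abstract outlines.

```python
import math

# Hypothetical toy graph: triangles {0,1,2} and {3,4,5} joined by the edge 2-3.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
FEATURES = [[1.0, 0.0], [0.9, 0.1], [0.6, 0.4], [0.4, 0.6], [0.1, 0.9], [0.0, 1.0]]
GOLD = {0: 0, 5: 1}  # only nodes 0 and 5 are labeled; the rest are unlabeled
N, C = len(FEATURES), 2

NEIGHBORS = {v: [] for v in range(N)}
for a, b in EDGES:
    NEIGHBORS[a].append(b)
    NEIGHBORS[b].append(a)


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def mean_vectors(vectors):
    # Element-wise mean of equal-length vectors.
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def one_hot(k):
    return [1.0 if i == k else 0.0 for i in range(C)]


class SoftmaxRegression:
    """Linear classifier fit by full-batch gradient descent on (soft) cross-entropy."""

    def __init__(self, n_in, n_out):
        self.W = [[0.0] * n_in for _ in range(n_out)]
        self.b = [0.0] * n_out

    def predict(self, x):
        return softmax([sum(w * xi for w, xi in zip(row, x)) + bk
                        for row, bk in zip(self.W, self.b)])

    def fit(self, xs, targets, lr=1.0, epochs=300):
        for _ in range(epochs):
            gW = [[0.0] * len(xs[0]) for _ in self.b]
            gb = [0.0] * len(self.b)
            for x, t in zip(xs, targets):
                p = self.predict(x)
                for k in range(len(self.b)):
                    g = p[k] - t[k]  # d(cross-entropy)/d(logit_k)
                    gb[k] += g
                    for j, xj in enumerate(x):
                        gW[k][j] += g * xj
            for k in range(len(self.b)):
                self.b[k] -= lr * gb[k] / len(xs)
                for j in range(len(gW[k])):
                    self.W[k][j] -= lr * gW[k][j] / len(xs)


def gmnn_sketch(em_rounds=3):
    # q_theta stand-in input: features averaged over a node and its neighbors
    # (one propagation step in place of a real GNN).
    h = [mean_vectors([FEATURES[u] for u in [v] + NEIGHBORS[v]]) for v in range(N)]
    q = SoftmaxRegression(len(h[0]), C)  # approximate posterior over labels
    p = SoftmaxRegression(C, C)          # local label-dependency model
    labeled = sorted(GOLD)
    q.fit([h[v] for v in labeled], [one_hot(GOLD[v]) for v in labeled])  # pre-train q
    for _ in range(em_rounds):
        # Current beliefs: gold labels where known, q's soft predictions elsewhere.
        beliefs = [one_hot(GOLD[v]) if v in GOLD else q.predict(h[v]) for v in range(N)]
        # p_phi stand-in input: mean label distribution of a node's neighbors.
        nb = [mean_vectors([beliefs[u] for u in NEIGHBORS[v]]) for v in range(N)]
        # M-step: fit p to predict each node's belief from its neighbors' beliefs.
        p.fit(nb, beliefs)
        # E-step: refit q on gold labels plus p's predictions for unlabeled nodes.
        targets = [one_hot(GOLD[v]) if v in GOLD else p.predict(nb[v]) for v in range(N)]
        q.fit(h, targets)
    return [q.predict(h[v]) for v in range(N)]


if __name__ == "__main__":
    for v, dist in enumerate(gmnn_sketch()):
        print(v, [round(x, 3) for x in dist])
```

Running the file prints q's final class distribution for each node. On this symmetric toy graph the two triangles end up in different classes; the numbers say nothing about how GMNN itself performs.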

Dr. Jian Tang has been an assistant professor at Mila (Quebec AI Institute) and HEC Montreal since December 2017. He was named to the first cohort of Canada CIFAR Artificial Intelligence Chairs (CIFAR AI Research Chair). His research focuses on deep graph representation learning, with a variety of applications including knowledge graphs, drug discovery, and recommender systems.

He was previously a research fellow at the University of Michigan and Carnegie Mellon University. He received his Ph.D. from Peking University and was a visiting student at the University of Michigan for two years. He was also a researcher at Microsoft Research Asia for two years. His work on graph representation learning (e.g., LINE and LargeVis) is widely cited. He received the best paper award at ICML'14 and was nominated for the best paper award at WWW'16.